When the API Goes Down: Business Continuity Plans

Man, let me tell you, we just went through a nightmare, and I figured I should write it all down before I forget the sheer panic. The title says it all: the API went down. Not just a little hiccup, but a full-blown, system-wide collapse that started mid-morning and lasted until well into the night. This is how we handled—or rather, didn’t handle—it initially, and what we built afterwards to make sure it never happens again.

It started with a few support tickets trickling in. Users complaining about slow load times, then errors popping up when they tried to process payments. Nothing alarming at first; maybe a database overload. I jumped onto the monitoring dashboard, and that’s when my stomach dropped. Latency spikes looked like the Himalayas, and error rates were hitting 99%. Our main microservice gateway was essentially dead.

My first move was to try the usual quick fixes. Restart the pods. Clear the cache. Check the logs. Nothing. The logs were just spitting out “connection timed out” errors. It quickly became clear this wasn’t just a simple code deployment gone bad. I pulled in the senior engineers. The war room started in Slack, then moved to an urgent Zoom meeting that nobody was dressed for.

We spent the first hour chasing ghosts. Was it the database cluster? No, the DB metrics looked fine, though underutilized because nothing could reach it. Was it the load balancer? Nope, traffic was hitting the LB fine, but dying right after it entered the ingress. Finally, the infrastructure guy—bless his soul—dug deep into the network layer. Turns out, a critical DNS configuration file got corrupted during a routine, scheduled infrastructure update that was supposed to be completely non-disruptive. Classic.

The problem wasn’t fixing the DNS record; that took maybe 20 minutes once we pinpointed the exact file and change needed. The real problem was the domino effect. Because the API was down for so long, our job queues backed up massively. Data integrity checks failed everywhere. When we brought the API back online, it immediately got hammered by hours of queued jobs suddenly trying to run all at once. We had to manually throttle everything back, one service at a time, spending the next twelve hours babysitting the system to prevent cascading failures.
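That manual throttling is essentially rate limiting by hand. As a minimal sketch of automating it, assuming the backlog sits in a single in-process queue, a token bucket can replay stuck jobs at a capped rate instead of all at once. `TokenBucket`, `drain_backlog`, and the injectable `clock`/`sleep` parameters are hypothetical names chosen for illustration, not part of any system mentioned here.

```python
import time
from collections import deque
from typing import Callable, Deque


class TokenBucket:
    """Hypothetical limiter: about `rate` jobs per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so a small burst goes through at once
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(float(self.capacity),
                          self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def drain_backlog(backlog: Deque[object], bucket: TokenBucket,
                  handle: Callable[[object], None],
                  sleep: Callable[[float], None] = time.sleep) -> int:
    """Replay queued jobs in order, never faster than the bucket allows."""
    processed = 0
    while backlog:
        if bucket.try_acquire():
            handle(backlog.popleft())
            processed += 1
        else:
            sleep(1.0 / bucket.rate)  # roughly one token's worth of refill time
    return processed
```

Giving each downstream service its own bucket, and raising the rates gradually as things stabilize, would mirror the one-service-at-a-time approach described above without someone babysitting it.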

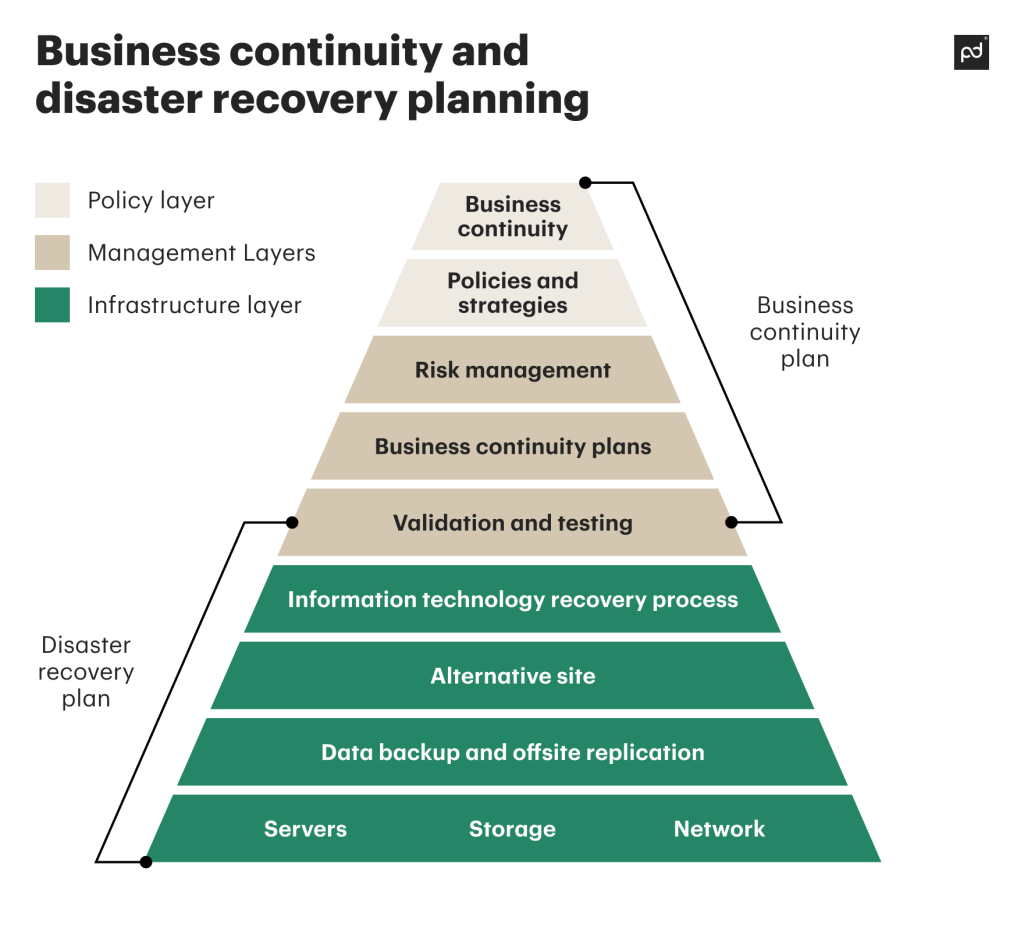

That day was a brutal lesson in business continuity. We had protocols for code bugs, resource scaling, and even database failures. But a full network-level API blackout? Our plan was basically “run around screaming.”

Building a Real Continuity Plan

The week after, I made sure we dedicated three full sprints to nothing but hardening our resilience and building a proper BCP (Business Continuity Plan) specifically for API failure.

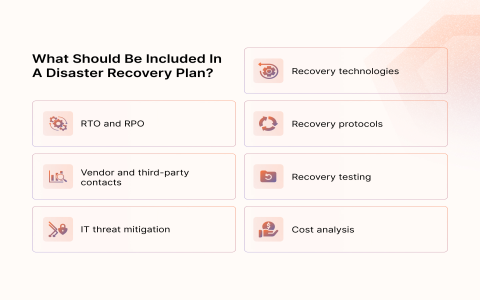

Here’s what we put into place:

- Dedicated Outage Communication Channel: We set up a Slack channel that, during an incident, is read-only for everyone except infrastructure and senior management. No engineers clogging up the feed with theories. Just facts: what’s down, what the ETA is, and when it’s resolved.

- Minimal Viable Service Tiers: We identified the two or three endpoints absolutely necessary for basic function: login and viewing core user data. Everything else (analytics, secondary features, payment processing) is now configured to gracefully degrade or fail fast, dumping jobs into an emergency queue that can be processed later.

- Automated Health Checks and Rollbacks: We wrote a script that runs every five minutes. If global latency goes above a specific threshold for more than two consecutive checks, it automatically triggers a rollback to the previous infrastructure configuration state. No more reliance on someone realizing an update went sideways.

- Pre-Defined Failover DNS: This was the biggest fix. We established a secondary DNS provider and built scripts that automatically switch all traffic to it if the primary health checks fail. It costs a bit more, but sitting idle because the entire API is unreachable costs way more.
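The service-tier idea can be sketched as a small router that, while an outage flag is set, serves only the critical endpoints and parks everything else in an emergency queue. This is a minimal in-memory sketch; the endpoint names, the `degraded` flag, and the 503 response shape are all assumptions, and a real system would keep the queue somewhere durable.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, Set, Tuple

Payload = Dict[str, Any]
Handler = Callable[[Payload], Any]

# Hypothetical names for the must-stay-up tier (login and core user data).
CRITICAL_ENDPOINTS: Set[str] = {"login", "view_core_user_data"}


class DegradationRouter:
    """Serves critical endpoints normally; parks everything else while degraded."""

    def __init__(self, critical: Set[str]) -> None:
        self.critical = critical
        self.degraded = False  # flipped by whatever detects the outage
        self.emergency_queue: Deque[Tuple[str, Payload]] = deque()

    def handle(self, endpoint: str, payload: Payload, handler: Handler) -> Dict[str, Any]:
        if self.degraded and endpoint not in self.critical:
            # Fail fast: don't touch the struggling backend, just park the request.
            self.emergency_queue.append((endpoint, payload))
            return {"status": 503, "queued": True}
        return {"status": 200, "body": handler(payload)}

    def replay(self, handlers: Dict[str, Handler]) -> int:
        """Once the outage is over, process parked requests in arrival order."""
        if self.degraded:
            return 0  # still degraded: leave the queue alone
        replayed = 0
        while self.emergency_queue:
            endpoint, payload = self.emergency_queue.popleft()
            handlers[endpoint](payload)
            replayed += 1
        return replayed
```

Replayed requests can arrive long after the user gave up, so the handlers behind the queue should be safe to run late and, ideally, idempotent.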
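The automated rollback check boils down to counting consecutive threshold breaches. Here is a sketch assuming a hypothetical `measure_latency_ms` probe and a hypothetical `rollback` callback that restores the previous infrastructure configuration; neither is specified in the post, and the threshold is left as a parameter. It reads "more than two consecutive checks" literally, so it trips on the third breach in a row.

```python
import time
from typing import Callable


class LatencyWatchdog:
    """Triggers a rollback when latency stays above a threshold for N checks in a row."""

    def __init__(self, threshold_ms: float, rollback: Callable[[], None],
                 required_breaches: int = 3) -> None:
        # "More than two consecutive checks": the third breach in a row trips it.
        self.threshold_ms = threshold_ms
        self.rollback = rollback
        self.required_breaches = required_breaches
        self.streak = 0

    def check(self, latency_ms: float) -> bool:
        """Record one reading; return True if this reading triggered a rollback."""
        if latency_ms > self.threshold_ms:
            self.streak += 1
        else:
            self.streak = 0  # one healthy reading resets the streak
        if self.streak >= self.required_breaches:
            self.streak = 0  # start counting afresh after rolling back
            self.rollback()
            return True
        return False


def run_forever(watchdog: LatencyWatchdog,
                measure_latency_ms: Callable[[], float],
                interval_s: float = 300.0) -> None:
    """Poll every five minutes (300 s), matching the schedule in the post."""
    while True:
        watchdog.check(measure_latency_ms())
        time.sleep(interval_s)
```

Requiring a streak rather than a single bad reading keeps one noisy sample from rolling back a perfectly good change.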
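The DNS failover logic can be sketched in a provider-agnostic way: probe the primary on a schedule, move to the secondary after repeated failures, and only move back once the primary has been healthy for a while. `probe_primary` and `switch_to` are hypothetical callbacks standing in for a real health check and a real DNS provider API, and the fail-back behavior with its thresholds is an assumption, not something the post describes.

```python
from typing import Callable


class DnsFailover:
    """Tracks which (hypothetical) DNS provider is active and switches on sustained failure."""

    def __init__(self, probe_primary: Callable[[], bool],
                 switch_to: Callable[[str], None],
                 fail_after: int = 2, recover_after: int = 3) -> None:
        self.probe_primary = probe_primary
        self.switch_to = switch_to
        self.fail_after = fail_after        # consecutive failures before failing over
        self.recover_after = recover_after  # consecutive successes before failing back
        self.active = "primary"
        self.failures = 0
        self.successes = 0

    def tick(self) -> str:
        """Run one probe, switch providers if a threshold is crossed, return the active one."""
        if self.probe_primary():
            self.successes += 1
            self.failures = 0
        else:
            self.failures += 1
            self.successes = 0
        if self.active == "primary" and self.failures >= self.fail_after:
            self.active = "secondary"
            self.switch_to("secondary")
        elif self.active == "secondary" and self.successes >= self.recover_after:
            self.active = "primary"
            self.switch_to("primary")
        return self.active
```

Separate fail and recover thresholds keep a flapping primary from bouncing traffic back and forth. Also note that resolvers and clients cache records until their TTL expires, so even an automated switch only takes full effect as cached answers age out; short TTLs on the affected records help.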

The crucial part was defining the “When.” If the API is down for more than 15 minutes, we execute the full BCP. Everyone knows their role. No panic, just execution. We ran a full dry run last month, simulating a DNS corruption exactly like the one that killed us. The automated failover kicked in, and we had the system running on the secondary DNS provider in under eight minutes. It was messy, but we were functional.

The pain of that day definitely forced us to grow up in terms of operations. You can’t predict every failure, but you sure as hell can plan how to stay alive when the inevitable happens. If your BCP is just a dusty document, go test it. Seriously.